- Incorta Community

- Discussions

- Administrative Discussions

- Re: Upper limit on datasets for a table ( and how ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Upper limit on datasets for a table ( and how are the processed ) ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-22-2023 11:27 AM

I have a scenario in front of me where I have a bunch of identical ( columns ) Incorta tables -- i.e. I have a table for each month of the year for the past 2+ years which I want to consolidate into one big table w/ all the data inside.

Monthly tables: Pulled from original source. Broken into monthly tables because they return exponentially faster that way.

Consolidated table: IncortaSQL apparently does not support doing a big UNION or UNION ALL and we were getting memory errors using SQLi with the big UNION statement.

What *IS* working is having all the month tables as separate datasets via SQLi - and it's pretty fast!

That said, we must remain optimistic and assume the calendar will not stop.

So we will need to keep adding month tables as we go ( we have 2023 covered, w/ July forward obvs not having any data )

So - finally - the question: Is there a limit to the number of datasets which Incorta will support?

Corollary question - how are these processed? Does Incorta send 36 requests ( to itself in this case ) at the same time? Is that governed by the parallel job tasks config in the CMC? I can't imagine they are all done in series, although there must be some staging somewhere w/in the mix because I am having Incorta enforce primary key constraints.

I'm very excited about this - give me some good news Community members! 😉

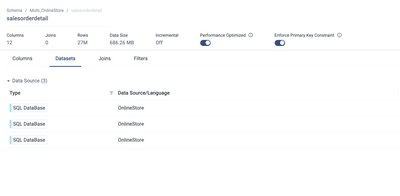

Screen cap - 3 of 30! datasets ( 2021 combines Jan-Jun as they were relatively low volume months )

- Labels:

-

Data Connectors

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-23-2023 08:55 AM

I'll answer the corrolary question.

The "staging" or primary key enforcement happens during the load/post-load phase so there should be parallelization across the different datasets and the configuration of the loader node (# of CPUs dedicated to loader, etc.) and parallelization in CMC should drive that.

However, because the queries themselves are hitting the Incorta Analytics service....the SQL interface/Analytics node could decide to queue up query execution on that end even though the client aka its own loader service may have issued a set of queries (8, 12, 16, whatever) in parallel.

I don't know of any limit on datasets per table (probably a theoretical one?) so maybe someone from Engineering can weigh in. Fun fact: the first Proof of Concept I ever did @ Incorta (2017?) was 50 different manufacturing instances (1 per factory) that we poured into a single schema/table structure so it's definitely built since the beginning to handle this type of use case.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-23-2023 09:00 AM

And here's the parallelization in action with OnlineStore (quickest example I could pull up):

So, query the largest table (salesorderdetail) 3 different ways making the 9 million row table into a 27 million row table.

And that extracts in 41 seconds:

And a single query to that table extracts in 30 seconds:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-23-2023 01:18 PM

@RADSr I am assuming your historical data (data for previous months) is also getting updated that's why you are doing full load where you load data for all the months using the approach you described.

If that is not the case and data for previous months do not change, then there are other ways to manage this load.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-25-2023 12:41 AM

The multi-source table is a good solution in this case, but is there a reason you must extract them in separate Incorta tables then glue them using a multi-source table through SQLi? I believe it will be better if you create a multi-source table where each dataset extracts from source directly, this way, you reduce one step (re-extraction through SQLi) which will save cluster resources.

As for the limit of number of data sets, I believe there's no limit. I have seen customer use cases where there were 25+ data sets in a table and I've heard of 60. Technically there's no limit. But you have to make sure the loader service has enough resources to execute.

When you load a table with multiple data sets, the loader will try to extract the data set in parallel. This is governed by a thread pool for all data sets being extracted across the load job, which may contain other tables. So, expect parallel execution of the queries against your source.

In the CMC, you can set the number of parallel load jobs, but this is for the whole load jobs (the ones you set in the scheduler), which are usually schemas. The capacity of the thread-pool used to extract tables and data sets depends on the CPU Utilization percentage you set for the loader service. The higher the percentage, the more tables (and data sets) can be extracted in parallel.