- Incorta Community

- Discussions

- Data & Schema Discussions

- Re: how to convert date to string

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

how to convert date to string

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-01-2024 02:59 AM

Hi,

I have two columns , one is timestamp and another one is string. I want to join these two columns in spark sql mv.

Date_effective column is timestamp with "yyyy-mm-dd hh:mm:ss" format.

Period_name column is string with "mm-yy" format. I want write below oracle query in Sparl sql, could you please let me know how to write?

Oracle query join: to_char(table_name.date_effective,'mm-yy')=table_name.period_name.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-01-2024 01:04 PM

Here is how I got it to work:

from pyspark.sql.functions import col, expr

df1 = read("[datestable]")

df2 = read("[periodstable]")

df1 = df1.withColumn("month_year", expr("substring(date, 6, 2) || '-' || substring(date, 3, 2)"))

joined_df = df1.join(df2, "month_year")

joined_df.show()- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-02-2024 10:53 AM

My prior replies got bounced ( and @JoeM gave a good answer already below ) but I'm going to try, try again...

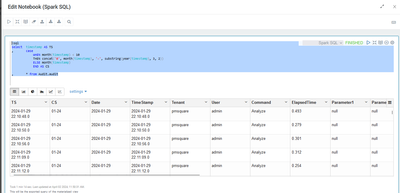

Here's a quick MV snip using SPARK SQL which will get you where you want to go.

That said and pro tip - having the period_name integrated into your date dimension will make this easier. Build out your date dimension!

===================

%sql

select timestamp AS TS

, case

WHEN month(timestamp) < 10

THEN concat('0', month(timestamp), '-', substring(year(timestamp), 3, 2))

ELSE month(timestamp)

END AS CS

, * from Audit.audit

=================

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-04-2024 06:15 AM

- how to convert date to string in Data & Schema Discussions

- Parse Timestamp in Dashboards & Analytics Discussions

- weeknum function bad results in Dashboards & Analytics Discussions

- How do I convert Julian date (stored as Integer) to proper date/timestamp format in Dashboards & Analytics Discussions

- '003338366400' converts to 2147483647 in Dashboards & Analytics Discussions