
PySpark log stdout


10-13-2023 01:47 AM

I am building a materialized view with PySpark and, since I am very new to Python, I am struggling a little. To do some debugging, I would like to log some hints from the code, ideally to stdout (but I will be happy with any log that is accessible from the Spark Master or from Incorta itself). I tried a few methods I found on Stack Overflow, but none of them worked. Any suggestions?


10-24-2023 05:40 AM - edited 10-24-2023 05:42 AM

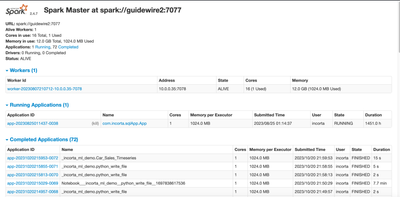

If you are using Incorta on-prem, you can access the Spark Web UI of the Spark standalone cluster. Its address depends on your Spark configuration; it typically runs on the Spark machine on port 9091. It looks like this page:

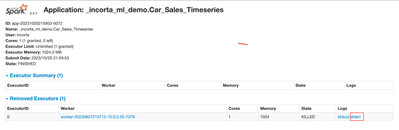

You can then click on the application ID to access the Application page like below.

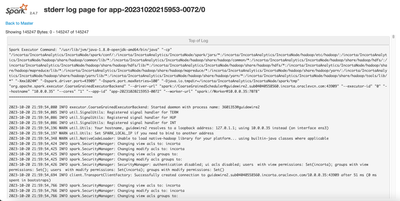

The log file is accessible by clicking on the stderr link and the log looks like this page:

If you are using Incorta Cloud, the Spark history server page is accessible at https://xxx.cloudx.incorta.com/applications.

It has two pages, one for completed jobs and the other for incomplete jobs. It looks like this page.

You can download the event logs, which are available as a JSON file.

I can't describe here how to read the event logs or how to debug with them; the above only covers how to access them.
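As a minimal starting point for reading a downloaded log, here is a sketch that tallies event types and lists the jobs that did not succeed. It assumes the file is an uncompressed Spark event log with one JSON object per line, each carrying an `"Event"` field; the `"Job ID"` and `"Job Result"` field names follow Spark's event-log JSON format, but check them against your own file. The function name `summarize_event_log` is hypothetical.

```python
import json
from collections import Counter


def summarize_event_log(lines):
    """Summarize Spark event-log lines (one JSON object per line).

    Hypothetical helper. Returns (Counter of event types, list of IDs of jobs
    whose result was not "JobSucceeded"). Assumes an uncompressed log; if the
    download is compressed, decompress it first.
    """
    counts = Counter()
    failed_jobs = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        kind = event.get("Event", "<unknown>")
        counts[kind] += 1
        # Job end events carry the outcome under "Job Result" -> "Result".
        if kind == "SparkListenerJobEnd":
            result = event.get("Job Result", {}).get("Result")
            if result != "JobSucceeded":
                failed_jobs.append(event.get("Job ID"))
    return counts, failed_jobs


# Usage (the file name is a placeholder):
# with open("eventlog.json", encoding="utf-8") as f:
#     counts, failed = summarize_event_log(f)
#     for kind, n in counts.most_common():
#         print(n, kind)
```

Taking an iterable of lines rather than a path means the same function works on an open file or on a list of strings.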

Hope it helps.


10-24-2023 08:56 AM

Hi, thanks, but unfortunately that doesn't answer my question. I know how to access the Spark Master and how to read its logs. What I don't know is how to write to them from a PySpark materialized view.
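One common approach, offered only as a sketch and not as an Incorta-documented method, is Python's standard `logging` module with a handler pointed at `sys.stdout` (by default, `logging.StreamHandler` writes to stderr). Code in the MV body runs on the Spark driver, so its output goes to the driver's stdout; where that surfaces (Spark Master UI or Incorta logs) depends on how Incorta launches the job, which is an assumption to verify. Output from code running on executors, for example inside a UDF, goes to the executors' stdout/stderr instead. The helper `get_mv_logger` and the logger name `mv_debug` are hypothetical.

```python
import logging
import sys


def get_mv_logger(name="mv_debug"):
    """Return a logger that writes timestamped messages to stdout.

    Hypothetical helper. Assumption: the Spark driver's stdout ends up in a log
    you can open; verify where it lands in your Incorta deployment.
    """
    logger = logging.getLogger(name)
    # Add the handler only once, so re-running the code does not duplicate lines.
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    # Do not also pass records to the root logger (avoids double output).
    logger.propagate = False
    return logger


log = get_mv_logger()
log.info("materialized view: starting")
# Plain print() also goes to the driver's stdout; flush=True writes it out immediately.
print("materialized view: checkpoint reached", flush=True)
```

If nothing appears under stdout, switching the handler to `sys.stderr` is worth a try, since the stderr link is where the Spark UI log was found earlier in this thread.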


10-26-2023 05:37 PM

I see. I typically use Incorta Notebook to develop PySpark Materialized Views. We can get immediate feedback by running each paragraph. We can then inspect the data by using some of the functions available in the Incorta Notebook.

Here are examples:

```python
df = read("EBS_PARTY_COMMON.HZ_PARTIES")
```

We can use `incorta.show(df)` to preview the data:

```python
incorta.show(df)
```

I may also show the data types of the schema before proceeding further:

```python
incorta.printSchema(df)
```

Typing any variable name in a paragraph and running the paragraph displays its value:

```python
df
```

Some of the Python built-in functions may help. Use `type()` to confirm the data type of a variable:

```python
type(df)
```

Spark actions can be useful as well:

```python
df.count()   # number of rows in the DataFrame
df.show()    # plain-text preview; incorta.show(df) gives formatted output
df.take(10)  # first 10 rows as a list of Row objects (first() takes no arguments)
```

We can examine the data using a SQL statement at any time:

```python
df.createOrReplaceTempView("TBL")  # TBL can be any name

spark.sql("""
    SELECT col1, col2
    FROM TBL  -- the name given above
    WHERE col3 = '123'
""").show()
```

We can view the output below the paragraph.
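To connect this back to the original question, the same inspection calls can be wrapped in a small helper that prints a one-line summary at each step of the MV. This is a hypothetical sketch (`log_step` is not an Incorta function); it relies only on the PySpark DataFrame methods `count()` and `schema.simpleString()`. Keep in mind that `count()` triggers a full Spark job, so calling it at every step can slow down a large MV.

```python
import time


def log_step(label, df, show_schema=False):
    """Print a one-line summary of a DataFrame at a named step; return its row count.

    Hypothetical helper. Works with a PySpark DataFrame (or anything with
    count() and, when show_schema=True, a .schema exposing simpleString()).
    """
    start = time.time()
    n = df.count()  # note: this runs a Spark job
    msg = f"[{label}] rows={n} in {time.time() - start:.1f}s"
    if show_schema:
        msg += f" schema={df.schema.simpleString()}"
    # flush=True so the line is written out immediately rather than buffered
    print(msg, flush=True)
    return n


# Example use inside the MV, with df read as in the notebook examples:
# log_step("after read", df, show_schema=True)
```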

Hope this helps.


11-03-2023 06:52 AM

@Piotrek82X - did this answer your question? The notebook is a great way to interactively view data and outputs during development.

- ML algorithm was given empty dataset. in Data & Schema Discussions

- Materialized view issue in Data & Schema Discussions

- PySpark Regressions using pyspark.ml Library in Dashboards & Analytics Discussions

- Materialized Views with Scala in Data & Schema Discussions

- Trying To Fit A Linear Regression with pyspark.ml in Data & Schema Discussions