- Incorta Community

- Knowledge

- Data & Schemas Knowledgebase

- How to unpivot a source table in Incorta

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Article History

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

on 05-26-2022 09:00 AM

Symptoms

Sometimes you may see source data only available as a cross table. Here is a sample data set from USAFACTS US COVID-19 cases. It shows the number of Covid 19 cases by county and date since the year 2020.

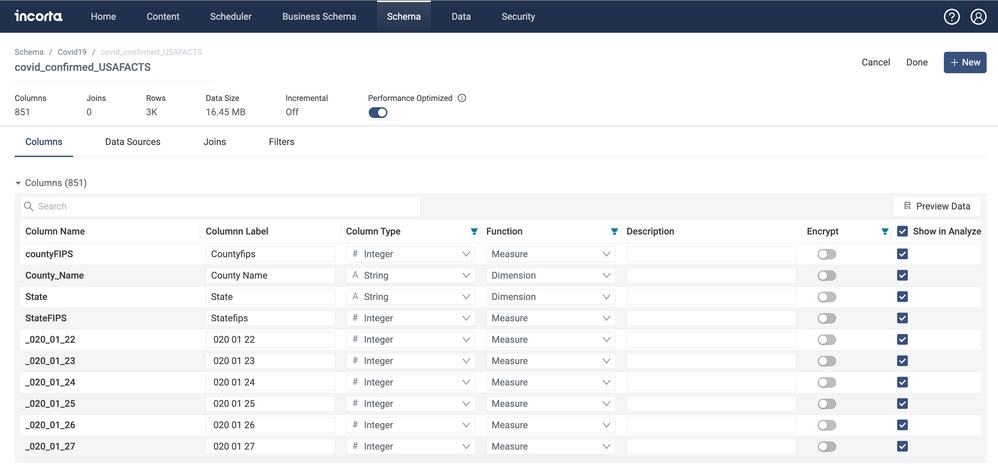

After uploading the data file into Incorta, you can preview it in Incorta:

You may notice that the table is very wide with 851 columns. Each row in this table represents the Covid-19 cases for a US county.

The columns are used to store the number of cases by date with each column representing a date beginning with January 2020.

Tables in this format will be difficult to analyze within Incorta. Rendering of the UI will be slow due to the width of the table and you won't have an easy way to slice and dice the data or show trending of the data with different levels of detail.

Diagnosis

The format may be the result of how the data was initially recorded in a spreadsheet, which allows columns to be extended to the right well into the thousands. Regardless, the data can be loaded into Incorta and can be transformed as Tidy data before performing analysis.

Tidy data is a well known concept for data scientists. It refers to data sets that include rows for observations and columns for variables.

We will convert the US Covid-19 case data set to have a single column for the number of cases and another column to describe the number of cases by dates. Each county will have multiple rows, one row per day.

Solution

Unpivot refers to the process of converting the column names to values of a key column and converting the values of the columns into values of another column.

There are many ways to perform unpivot function within Incorta. This article will show you how to use Incorta Materialized Views (MV) to unpivot data.

Here is the source code you can use:

df = read("Covid19.covid_confirmed_USAFACTS")

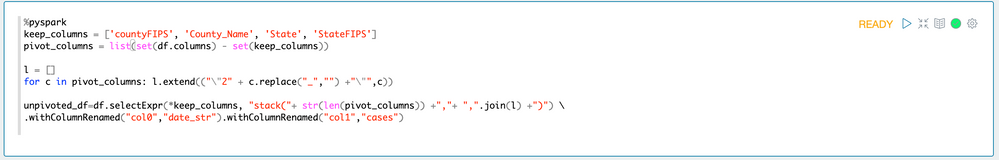

keep_columns = ['countyFIPS', 'County_Name', 'State', 'StateFIPS']

pivot_columns = list(set(df.columns) - set(keep_columns))

l = []

for c in pivot_columns: l.extend(("\"2" + c.replace("_","") +"\"",c))

unpivoted_df=df.selectExpr(*keep_columns, "stack("+ str(len(pivot_columns)) +","+ ",".join(l) +")") \

.withColumnRenamed("col0","date_str").withColumnRenamed("col1","cases")

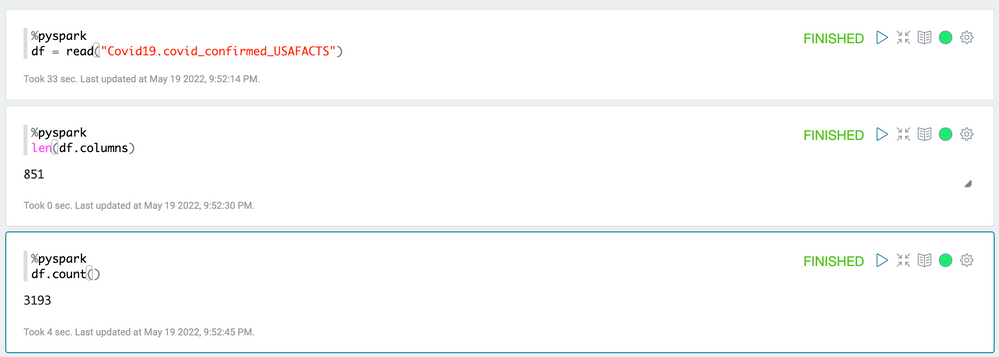

save(unpivoted_df)First, load the data from Incorta to Spark:

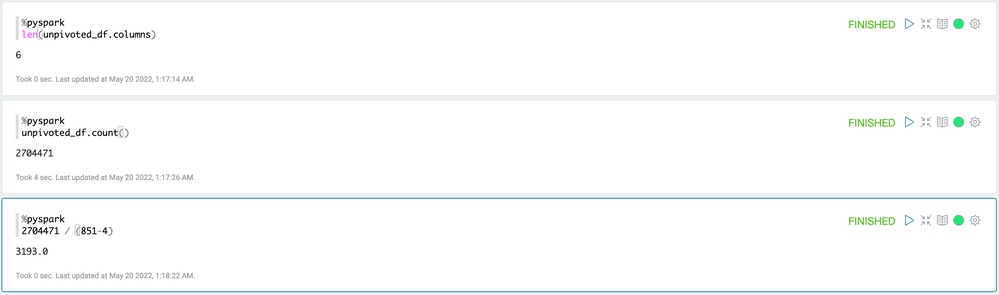

You can see that the table has 851 columns and 3,193 rows

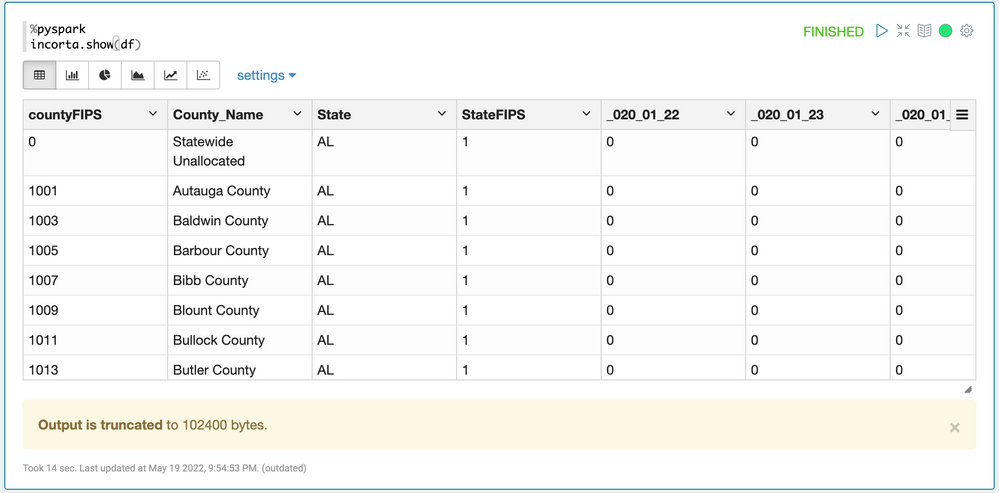

You can preview the data using Incorta Notebook:

Incorta Notebook will not show all the data, but the data shown should be sufficient for you to know that each row is for a US county and the columns, such as _020_01_22, _020_01_23, are for different dates.

The first four columns describe the Covid-19 cases and we will keep them.

The other columns will be stored as values in a new key column and the values will be stored in another new column.

keep_columns are the four columns we would like to keep in the new table.

pivot_columns are the rest of columns which are the dates.

The Spark SQL stack() function can be used to split one row into multiple rows.

The first argument indicates the number of rows. We set it to the number of pivot columns.

The column name is transformed to the yyyyMMdd format, so "_020_01_27" becomes 20200127.

Here is the result of the unpivot data:

The result has six columns and the number of rows matches our assumption, ( 851-4 ) * 3,193 = 2,704,471