Delta Sharing

on 01-26-2023 10:46 AM - edited on 01-31-2023 03:21 PM by Tristan

Introduction

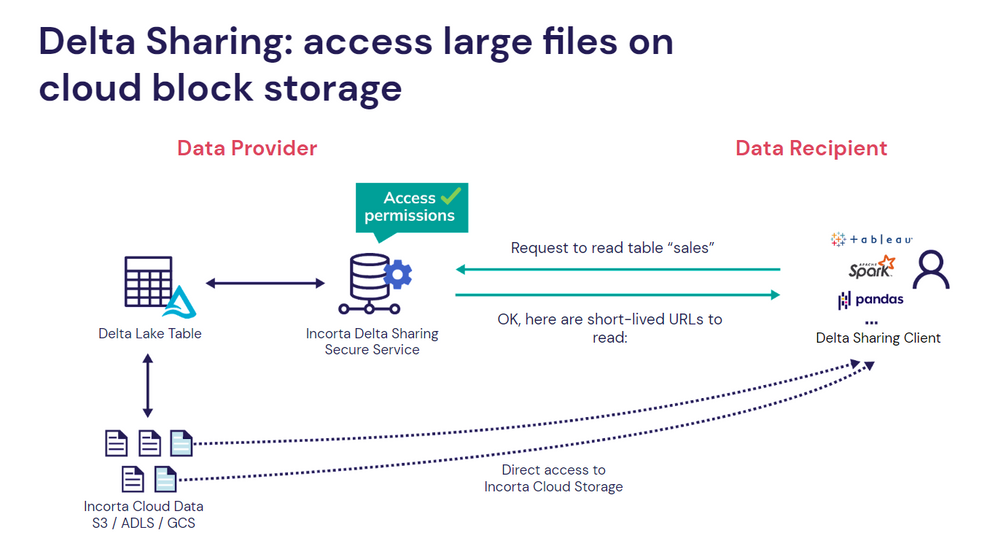

Delta Sharing is a simple REST protocol that securely shares access to part of a cloud dataset. It leverages modern cloud storage systems, such as S3, ADLS, or GCS, to reliably transfer large datasets. There are two parties involved: Data Providers and Data Recipients.

If you are the Data Provider, Delta Sharing lets you share existing tables, or parts of them (e.g., specific versions of a table), stored on your cloud data lake in Delta Lake format. The data provider decides what data to share and runs a sharing server in front of it that implements the Delta Sharing protocol and manages access for recipients.

As a Data Recipient, all you need is one of the many Delta Sharing clients that support the protocol. In addition, there are open-source connectors for pandas, Apache Spark, Rust, and Python.

The actual exchange is carefully designed to be efficient by leveraging the functionality of cloud storage systems and Delta Lake.

Protocol Benefits

Delta Sharing is an open-source standard for communication with the following properties:

- Cloud storage agnosticism (multi-cloud)

- Meets strong security, privacy, and compliance requirements

- Grants, tracks, and audits access to shared data from a single point of enforcement

Let's Go

How it works

The protocol works as follows:

- First, the recipient's client authenticates to the sharing server (via a bearer token or other method) and asks to query a specific table. (Zaharia et al. 2021)

- The server verifies whether the client can access the data, logs the request, and determines which data to send back. This will be a subset of the data objects in GCS or other cloud storage systems that make up the table. (Zaharia et al. 2021)

- To transfer the data, the server generates short-lived pre-signed URLs that allow the client to read the Parquet files that constitute the required data directly from the cloud provider. The transfer can therefore happen in parallel at massive bandwidth without streaming through the sharing server. Pre-signed URLs are available in all the major clouds, which makes it fast, cheap, and reliable to share large datasets. (Zaharia et al. 2021)

The server will act as a middleware between GCS and the data recipient. Effectively, it will behave like a consumer of GCS and a producer for the end-user.
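The request and response in the steps above can be sketched in a few lines of Python. This is a minimal sketch that follows the open Delta Sharing REST protocol (a `POST` to the table's `/query` path with a bearer token, answered with newline-delimited JSON); an Incorta server may authenticate differently, for example with an API key. The function names, the `storage.example.com` URLs, and the sample response are made up for illustration.

```python
import json


def build_query_request(endpoint, token, share, schema, table, limit_hint=None):
    """Return (url, headers, body) for a table query in the open protocol.

    Nothing is sent over the network; this only shows the shape of the call.
    """
    url = f"{endpoint.rstrip('/')}/shares/{share}/schemas/{schema}/tables/{table}/query"
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json; charset=utf-8",
    }
    body = {} if limit_hint is None else {"limitHint": limit_hint}
    return url, headers, json.dumps(body)


def extract_file_urls(ndjson_text):
    """Collect the pre-signed URLs from a newline-delimited JSON response."""
    urls = []
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # Only "file" records point at Parquet data; "protocol" and
        # "metaData" records describe the table itself.
        if "file" in record:
            urls.append(record["file"]["url"])
    return urls


# Illustrative response only: the IDs, sizes, and URLs are invented.
SAMPLE_RESPONSE = "\n".join([
    json.dumps({"protocol": {"minReaderVersion": 1}}),
    json.dumps({"metaData": {"id": "example-table-id", "format": {"provider": "parquet"}}}),
    json.dumps({"file": {"url": "https://storage.example.com/part-0000.parquet?sig=abc", "id": "f0", "size": 573}}),
    json.dumps({"file": {"url": "https://storage.example.com/part-0001.parquet?sig=def", "id": "f1", "size": 612}}),
])

if __name__ == "__main__":
    print(build_query_request("http://localhost:8080/delta-sharing", "my-token", "share1", "schema1", "table1"))
    print(extract_file_urls(SAMPLE_RESPONSE))
```

In practice a connector performs these calls for you; the sketch only makes visible that the server returns pointers to files rather than the data itself.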

A peek under the hood

Delta Sharing uses pre-signed, short-lived URLs; therefore, data is retrieved at the speed of the cloud object storage. As a result, throughput and bandwidth are not limited by the sharing server.

The pre-signed URL is not even visible to the end user. Instead, the user has a share profile file. The file is used inside a client (through either a Python Connector or an Apache Spark Connector) to request data from the sharing server.

The server sends a pre-signed URL that the client will fetch data through. The endpoint in the profile file is simply the client's access point to the server hosting the middleware.
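To illustrate why throughput is not bound by the sharing server, here is a minimal sketch of a client downloading several pre-signed URLs in parallel, directly from object storage. It is not how any particular connector is implemented; `fetch_bytes` and `fetch_all` are hypothetical helper names, and the worker count is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def fetch_bytes(url, timeout=30):
    """Download one object over HTTP(S) and return its raw bytes."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def fetch_all(urls, fetch=fetch_bytes, max_workers=8):
    """Download every URL concurrently.

    Results come back in the same order as `urls`. The `fetch` parameter
    is injectable so the function can be exercised without a network.
    """
    if not urls:
        return []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


if __name__ == "__main__":
    # Stand-in fetcher so the example runs offline.
    fake_fetch = lambda url: f"contents of {url}".encode()
    print(fetch_all(["part-0000", "part-0001"], fetch=fake_fetch))
```

Because each request goes straight to the storage service, adding workers increases bandwidth without adding load on the sharing server.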

How to use it

Step 1

The Python connector needs a share profile file with which it can authenticate to the server. According to the protocol, the profile file looks as follows:

{
  "shareCredentialsVersion": 1,
  "endpoint": "http://{delta-sharing-server-address}:{port}/delta-sharing",
  "apikey": "{incorta-user-public-api-key}",
  "instanceName": "{incorta-cluster-instance-name}",
  "tenantName": "{incorta-tenant-name}"
}

In this example, we will name our share profile file `share.json`.
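If you prefer to generate the profile file from a script, a minimal sketch could look like the following. The key names come from the profile shown above; treating all five as required is an assumption, and `missing_keys`, `write_profile`, and `load_profile` are hypothetical helper names, not part of any Incorta package.

```python
import json
from pathlib import Path

# Keys taken from the profile shown above. Treating all of them as
# required is an assumption made for this sketch.
REQUIRED_KEYS = (
    "shareCredentialsVersion",
    "endpoint",
    "apikey",
    "instanceName",
    "tenantName",
)


def missing_keys(profile):
    """Return the required keys that are absent or empty, in a fixed order."""
    return [k for k in REQUIRED_KEYS if k not in profile or profile[k] in ("", None)]


def write_profile(path, profile):
    """Validate the profile and write it to `path` as JSON."""
    missing = missing_keys(profile)
    if missing:
        raise ValueError("profile is missing: " + ", ".join(missing))
    target = Path(path)
    target.write_text(json.dumps(profile, indent=2), encoding="utf-8")
    return target


def load_profile(path):
    """Read a profile file back and validate it."""
    profile = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = missing_keys(profile)
    if missing:
        raise ValueError("profile is missing: " + ", ".join(missing))
    return profile
```

Checking the file before handing it to the connector gives a clearer error message than a failed authentication would.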

Step 2: Create a Python connector client

import incorta_delta_sharing

# Point to the profile file created in Step 1.
profile_file = "share.json"

# Create a sharing client from the profile.
client = incorta_delta_sharing.IncortaSharingClient(profile_file)

# List all tables shared with this profile.
print(client.list_all_tables())

# A table URL has the form <profile-file>#<share>.<schema>.<table>.
table1_url = profile_file + "#share1.schema1.table1"

# Load the table as a pandas DataFrame...
table1_pandas_df = incorta_delta_sharing.load_as_pandas(table1_url)

# ...or as a Spark DataFrame, if you prefer Spark.
table1_spark_df = incorta_delta_sharing.load_as_spark(table1_url)
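The table URL passed to the loaders is just the profile path, a `#`, and a dotted `share.schema.table` name. A small helper, sketched here under the assumption that none of the three names contains a `.` or `#`, avoids typos when building many of them. `table_url` and `split_table_url` are hypothetical names used only for illustration.

```python
def table_url(profile_file, share, schema, table):
    """Build '<profile-file>#<share>.<schema>.<table>'."""
    for label, value in (("share", share), ("schema", schema), ("table", table)):
        # Assumption for this sketch: names are non-empty and contain
        # neither of the two separator characters.
        if not value or "." in value or "#" in value:
            raise ValueError(f"invalid {label} name: {value!r}")
    return f"{profile_file}#{share}.{schema}.{table}"


def split_table_url(url):
    """Split a table URL back into (profile_file, share, schema, table)."""
    profile_file, separator, fragment = url.rpartition("#")
    parts = fragment.split(".")
    if not separator or not profile_file or len(parts) != 3 or not all(parts):
        raise ValueError(f"invalid table URL: {url!r}")
    return (profile_file, *parts)


if __name__ == "__main__":
    url = table_url("share.json", "share1", "schema1", "table1")
    print(url)
    print(split_table_url(url))
```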

Related Material

Zaharia, Matei, et al. "Introducing Delta Sharing: an Open Protocol for Secure Data Sharing." Databricks, 26 May 2021, https://www.databricks.com/blog/2021/05/26/introducing-delta-sharing-an-open-protocol-for-secure-dat.... Accessed 10 January 2023.


@BasemG Is it only for Incorta cloud, or can we use it with the on-prem customer as well?


We start the Delta Share server-side process in the Incorta Cloud when Delta Share is enabled. For on-prem or private cloud environments, it is possible to use Delta Share, but the customer needs to start the process themselves.