- Incorta Community

- Knowledge

- Data & Schemas Knowledgebase

- Incorta Data Delivery of Oracle Cloud ERP to BigQu...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Article History

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

07-18-2025 12:45 PM - edited 07-18-2025 01:08 PM

Overview

Incorta Data Delivery streamlines the integration between Oracle Cloud ERP and data destinations like Google BigQuery, Snowflake or Databricks. In addition, it accelerates data mart deployment and automates data model design and source schema mapping with Incorta data applications. The data applications provide a new, faster, more practical option for analyzing and understanding your data, with no data modeling or extract, transform, and load (ETL) required.

Incorta's High-Level Data Delivery Process:

- Connect: Using a data application, establish a connection between Incorta and your data source.

- Schema Definition: Load the physical schema tables from your source (e.g., Oracle cloud ERP).

- Data Delivery: Leverage Incorta's Data Destination feature to deliver data to the CDC or Reporting layer. Incorta manages all table creation and updates.

- Efficient Data Transfer: Incorta efficiently transfers data from its internal Parquet storage layer to the corresponding target tables.

- Data Synchronization: Incorta fully supports inserts, updates, and deletes from the source data into target, ensuring data synchronization.

- Visualization: Analyze and visualize the data using Looker or other preferred BI tools.

Installation and Configuration

Follow these steps to install Incorta and configure the pipeline that moves data from Oracle Cloud ERP into BigQuery.

- Create a new Incorta cloud cluster

- Install the ‘Oracle Cloud Applications (BICC)’ connector from the connector marketplace

- From the Incorta Applications marketplace install and configure the Oracle Cloud ERP to Google BigQuery data application. Refer to this guide.

- Ask Incorta for the BIACM customization file and Import into Oracle BIACM, this has the pre-seeded jobs

- Create and configure the BigQuery Data Destination in Incorta. Refer to this guide.

- Please note that the BigQuery dataset (schema) in the BigQuery project needs to be multi-region or the project and the Incorta cluster need to be in the same region.

Star Schema Offering

Incorta delivers reporting ready business views as a part of the Oracle Cloud ERP to BigQuery Data App offerings.

- Built on top of base fusion schemas using Materialized Views (MVs) on star schema like Business Schemas

- Star schema data models in Incorta business schema for Order to Cash, Procure to Pay, General Ledger, HCM, Project modules for Oracle Cloud ERP whose data will be pushed to target.

- A set of Business schemas (with the suffix DM) and Schemas will be delivered as part of this offering. The schemas have the Dimension and Fact tables, which will be delivered to target.

Data Delivery

Following is the high-level Incorta Data Delivery process:

- The Incorta Oracle Cloud ERP to BigQuery data app should be installed & configured according to the steps above.

- Note that the star schemas have a suffix of _ALL (fact and dimension). Configure the data destination for all of them. Refer to this document on how to configure a data destination.

- Make sure that the target schema value in the data destinations for all the _ALL schemas have the same value, for example INCORTA_FUSION_DATA_APP, and have the Target Table Prefix as stated in the table below. This will ensure that all the tables go into one schema and that the Looker blocks will be able to query those tables. Here are the prefixes to be used - also refer to the sample screenshot below.

|

Schema |

Table Prefix |

|

FUSION_AP_FACTS_ALL |

AP_ |

|

FUSION_AR_FACTS_ALL |

AR_ |

|

FUSION_COMMON_DIM_ALL |

CMN_ |

|

FUSION_FA_FACTS_ALL |

FA_ |

|

FUSION_GL_FACTS_ALL |

GL_ |

|

FUSION_HCM_FACTS_ALL |

HCM_ |

|

FUSION_HCM_COMMON_DIM_ALL |

HCM_CMN_ |

|

FUSION_OM_FACTS_ALL |

OM_ |

|

FUSION_PO_FACTS_ALL |

PO_ |

|

FUSION_PRJ_FACTS_ALL |

PRJ_ |

- Create a Load Plan with base schemas and schemas of fact and dimension tables.

- The Load Plan will ingest from the source and deliver to the target.

- Visualize the data with sample views in Incorta or with Looker or another BI tool in the target (BigQuery).

- Refer to the doc for an explanation of the Facts, Dimensions and sample BigQuery Views which can be deployed.

Incorta Objects

This section of the document lists the objects included in the deployment.

Business Schema

|

Module |

Business Schema |

|

Common |

CommonDimensionDM |

|

Order To Cash |

OrderManagementDM ARCashReceiptsDM |

|

Procure to Pay |

PurchaseOrderDM PayablesDM InventoryDM |

|

General Ledger |

GeneralLedgerDM |

|

Fixed Assets |

FixedAssetDM |

|

Projects |

ProjectFinancialsDM |

|

HCM |

HRCommonDimensionDM WorkforceDM |

Schemas

|

Business Process |

Module |

Base Physical Schemas |

Fact and Dimension Physical Schemas |

|

Procure To Pay(p2p) |

Advanced Payables (AP) Procurement (PO) Inventory(INV) |

Fusion_CAL_COMMON Fusion_Item_Common Fusion_HR_Common Fusion_FND_Common Fusion_FIN_Common Fusion_PARTY_COMMON Fusion_AP Fusion_PO Fusion_INV |

FUSION_COMMON_DIM_ALL FUSION_AP_FACTS_ALL FUSION_PO_FACTS_ALL |

|

Order to Cash(o2c) |

Account Receivables (AR) Order Management (OM) |

Fusion_CAL_COMMON Fusion_Item_Common Fusion_HR_Common Fusion_FND_Common Fusion_FIN_Common Fusion_PARTY_COMMON Fusion_AR Fusion_ONT Fusion_AR_SNP Fusion_AR_MV |

FUSION_COMMON_DIM_ALL FUSION_AR_FACTS_ALL FUSION_OM_FACTS_ALL |

|

General Ledger |

General Ledger (GL) |

Fusion_CAL_COMMON Fusion_Item_Common Fusion_HR_Common Fusion_FND_Common Fusion_FIN_Common Fusion_PARTY_COMMON Fusion_GL Fusion_XLA Fusion_XLA_MV |

FUSION_COMMON_DIM_ALL FUSION_GL_FACTS_ALL |

|

Fixed Assets |

Fixed Assets |

Fusion_CAL_COMMON Fusion_Item_Common Fusion_HR_Common Fusion_FND_Common Fusion_FIN_Common Fusion_PARTY_COMMON Fusion_FA |

FUSION_COMMON_DIM_ALL |

|

Projects (PPM) |

Projects (PPM) |

Fusion_CAL_COMMON Fusion_Item_Common Fusion_HR_Common, Fusion_FND_Common Fusion_FIN_Common Fusion_PARTY_COMMON Fusion_PRJ |

FUSION_COMMON_DIM_ALL FUSION_PRJ_FACTS_ALL |

|

HCM Core |

HCM Core |

Fusion_CAL_COMMON Fusion_HR_Common Fusion_HCM |

FUSION_COMMON_DIM_ALL FUSION_HCM_COMMON_DIM_ALL FUSION_HCM_FACTS_ALL |

Loading Data into Incorta

In the Incorta UI, navigate to Scheduler->Load Plans and schedule the load plan for your application.

By default, the load plans are set to incremental, allowing you to load just the latest data changes in Oracle Cloud applications. You can schedule the timing of your incremental loads

by navigating to Scheduler->Load Plans and selecting your Oracle Cloud application load plan.

Click on the Change link to open the Schedule UI, which will allow you to schedule the interval for your Incremental loads.

Load History

The first time a load plan runs, it will execute a full load of each included schema, even if configured to run incrementally. This happens because there is no data in Incorta to append to, so it will pick up everything that is available per the definition of each dataset. This will take care of loading historical data. It is also possible to run load plans as full loads as well and you can find more information about Full Loads in Incorta’s documentation. Note that full loads will remove all data from the tables that are being populated before reloading them fully.

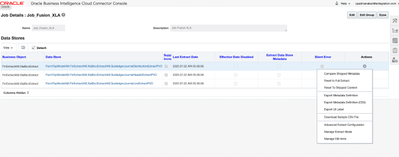

To monitor a load plan, navigate to Schema->Load Plan. You can check the row counts from the various stages of the load - Extracted, Rejected , Loaded, and Sent to Destination.

Incremental Loads

After the initial load, Incorta will continue to run the loads in Incremental mode. Incremental loads handle upserts, i.e. updated records and inserted records, which are pulled into Incorta and then immediately pushed to BigQuery whenever an incremental load executes.

Load Performance Considerations & BICC Error handling

- If the data is simply being passed through to BigQuery, the schema tables can be set to non-optimized mode. This improves performance by skipping the post-load step.

- In the CMC, allocate more on-heap and off-heap memory to the Loader Service than to the Analytics Service, enabling greater capacity for parallel data loading and transmission. For example, allocate 80% of total memory to loader and 20% to analytics.

- Troubleshooting load errors:

- Error: The extraction process was interrupted due to inactivity; no data was fetched for the last [45] minutes

- Error: Failed getting data store files

- Troubleshooting Steps:

- Check that the BICC Job name and Job Id is configured correctly in Fusion data connection.

- Increase the initial delay for this timeout in the Fusion data connection through the option Job Status Check Interval (in Seconds) from 15 to 60 seconds.

- The default job wait time is 1 hour. Check in BICC how long the job takes, and then increase the timeout on the Fusion data connection. Increase the option Max Job Wait Time (in Seconds) (Available in the Advanced Options) to 10800 (3 hours).

- In CMC tenant config Increase the extraction timeout: Under the Data Management table modify the value to 180 (3 hours) or more.

- Error: java.io.IOException error : Premature EOF after all retries

- The EOF error can be overcome by a retry mechanism. The number of retries can be increased by using client.requests.retry.max in the connector extra options.

- To reduce the data being ingested from a Fusion extract:

- Use the Advanced Extract Configuration in BICC

- Open the Job in BICC and on the right hand side select Advanced Extract Configuration and choose a date to use for the initial extract filter. Refer to this Oracle document for more information.

- Add a query filter to a VO in BICC: Edit the Job and then click on the VO and in the ‘Query filter’ section enter a filter to restrict the returned data when you run the query. All column references should follow the format: underscore ( _ )underscore ( _ ) DATASTORE underscore ( _ ) underscore ( _ )dot(.)>BI VO Column Name> . For example select __DATASTORE__.ViewApplicationId=0 where ViewApplicationId is the column name in the BI VO and of data type number. Similarly, to filter results based on timestamp, you can follow the format __DATASTORE__.CostDistributionLinesEOLastUpdateDate >= TIMESTAMP '2019-01-15 07:45:00'

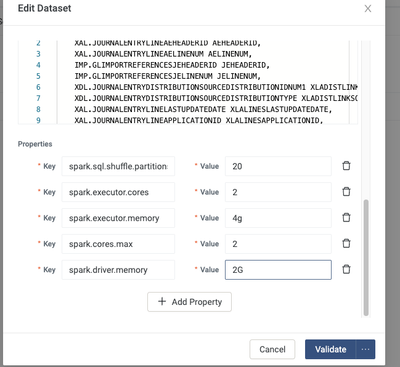

- MV failures: If some of the MVs fail then please open the MV and add the MV properties for cores and memory, for example:

Load Failure Notification

If a load from a source table fails in Incorta, incremental data will not write to BigQuery. Incorta can send an alert to a designated email address to notify an administrator of a failure.

Configure the Incorta tenant to be able to send an email if you wish to receive alerts. Then follow these instructions to set up notifications for the Incorta schemas you wish to track.

Make sure to select Notify Upon Failure and populate the Recipients field with an appropriate email address to monitor load jobs.

Incorta will not attempt to reload the data until the next scheduled load in case of a load failure. You can review the logs in Incorta to determine the cause of the issue and take any necessary steps to address it. If further assistance is required, you can work with Incorta Support to resolve the issue. Once the issue is resolved, data will be caught up the next time a scheduled load occurs. Alternatively, you can manually run a load at the schema or individual table level to catch up on the data.

Steps to Customize the Models

- Adding new columns to existing model

- Edit the correct business schema with the DM suffix, for eg OrderManagementDM

- Add new fields to the correct business view within that, making sure to check the query plan and that the view is validated with a green check mark and Save.

- Now edit the correct MV schema for eg FUSION_OM_FACTS_ALL , open the MV and add the new column, validate and save the mv.

- Load the mv and test the data

- Adding new Dimension to an existing model

- Edit the correct business schema with the DM suffix

- Add a new view and drag new fields from any existing physical schema.

- Make sure that the PK column is correctly named for eg INVOICE_ID

- Check the query plan and that the view is validated with a green check mark and Save

- Now edit the correct MV schema for eg FUSION_OM_FACTS_ALL , add a new MV and add sql query on the new business view, validate and save the mv.

- Load the mv and test the data

- Adding new Fact to an existing model

- Edit the correct business schema with the DM suffix

- Add a new view and drag new fields from any existing physical schema.

- Add the PK column, dimension keys, LAST_UPDATE_DATE and any metrics and attributes columns

- Check the query plan and that the view is validated with a green check mark and Save

- Now edit the correct MV schema for eg FUSION_OM_FACTS_ALL , add a new MV and add sql query on the new business view, Add incremental logic to the MV. Validate and save the mv.

- Load the mv and test the data

Looker Dashboards

As part of this initiative looker dashboards were developed in the areas of Procure To Pay, Order to Cash and General Ledger, we have deployment doc on how to deploy them to a looker environment. For these to work please follow the Target schema and table naming convention mentioned above.

Data Validation

There is a data validation utility which helps to validate the counts and other metrics from Incorta to Big Query, here are install, config & execution details.

Logical Star Schema Models

FAQ

- What is the End-to-End Data Loading Strategy from Oracle Fusion to BigQuery via Incorta?

- Data Loading: Oracle Fusion to Incorta

- The process begins with Oracle Fusion's View Objects (VOs) set up as jobs in Oracle BICC (Business Intelligence Cloud Connector). Incorta's BICC connector triggers the BICC job APIs to initiate data extraction. Upon completion, BICC stores the extracted data as zipped CSV files in Oracle UCM. Once the file lands in UCM, it is streamed and ingested into Incorta.

- BICC manages both full and incremental data extracts automatically, leveraging the last refresh date stored within BICC. Incorta's connector reads only the incremental data files generated by BICC for each VO object, ensuring efficient processing.

- For incremental updates in Incorta, predefined keys are used for supported tables. These keys facilitate updates and inserts in Incorta’s parquet data layer. Every data load generates a new parquet file, and an automated compaction process ensures the latest updates for each row are reflected in the consolidated parquet file.

- Data Transfer: Incorta to BigQuery (BQ)

- When a schema's data destination is configured for BigQuery, Incorta handles the transfer of full and incremental data from its parquet files to BQ. This is done with precision, as Incorta identifies updates in the parquet files from the previous step. The data is sent to BQ using BigQuery APIs.

- Incorta also manages table creation and updates directly within BigQuery, ensuring data integrity. During each load plan execution, row count details are provided, showing the number of rows extracted from the source to Incorta and the number of rows sent to BQ.

- Does Incorta have the option to choose the Job type in BICC? This is to identify Data "Active Primary keys Extract" vs "Application Data Extract" feeds.

- Yes, we support both the options in our data connection . Please check the attached document's section on Handling deletes.

- Can individual pipelines be grouped within Incorta, say by subject area, so ad-hoc pulls can be managed easily?

- Yes, Incorta has a Load Plan feature where it is possible to load multiple schemas. It will automatically take care of all the dependencies. Under Scheduler->Load Plans you can create it, please check this article for more information.

- How does data sequencing work in Incorta data models considering the dependencies and late arrival records?

- Load plans handle all of this. The inserts/updates work based on the keys of the table. If the key is absent then Incorta does an insert into the Parquet file. Otherwise, it does an update.

- Can Incorta transformations look up Google BigQuery data for the incoming BICC data to determine updates/inserts?

- The Incorta Data Destination feature which pushes data from Incorta to BigQuery supports change data capture and automatically pushes the upserts to BIgQuery. As a result, there is no need to worry about this. Incorta also handles all the schema changes and pushes them to the BigQuery side as well.

- Can you touch upon trouble shooting/debugging options in data flows.

- It is simple to quickly build dashboards in Incorta to help in debugging. The load job details screen also shows the source and destination counts for each executed load plan and Incorta can also provide validation scripts which if required.

- What level of access is required to trigger ad-hoc jobs? Is it possible for business users to log in to Incorta and just trigger specific jobs (self managed) without impacting schedules of other load jobs?

- A load plan can be scheduled in Incorta or it can be run ad-hoc. Typically a user with SuperRole role does this.

- A load plan cannot be started if it is already running. This means that if a load plan has been triggered to run manually and is running at the time that it normally scheduled to run, it will skip that scheduled run.

- How does the Oracle Cloud Applications (BICC) connector work?

- Incorta makes a rest API call on the Oracle BICC job which creates a new job_id in BICC and then it looks for Oracle UCM files with that job id and ingests those files. BICC automatically creates incremental files based on the last refresh date it stores at the VO level.

- Typically Incorta only picks one file, but it can ingest the previous one if there is a failure. For example, if a load job fails, the next load will pick up all new BICC generated files.

- For full loads, Incorta checks only the UCM files generated by BICC with a valid manifest file. To skip files in a full load use the Load Files Since field.

- What if full load from BICC always fails?

- Add a filter in the VO or check out the chunking option.

- How do I always do full load for a VO?

- In BICC edit the VO and if you uncheck the option pictured below then the VO extract will always be full load.

- Disable the incremental option in the corresponding Incorta table’s dataset tab and choose the ‘Load last batch’ option.

- In on-premises Incorta server during load we get: Error: java.io.IOException: Cannot run program "chmod": error=24, Too many open files

- Solution: This issue happens because the connector build now has multiple jars, so at the time of startup, the server will try to load all the available connectors, which requires opening all the connector jars; this hits the OS limit of the allowed number of open files per process.

On the server, run this command: ulimit -a output

Check the open files parameter, if it is 1024 then fix it by increasing the number of open files. Edit this file /etc/security/limits.conf and add the following lines:

* soft nproc 65535

* hard nproc 65535

* soft nofile 65535

* hard nofile 65535Restart both the services.

- How do I delete a zip file in UCM?

- Login to UCM, search for the file and click on the ‘i’ icon. Click on the Delete button.

- Does Incorta support Incremental extracts for Oracle Cloud ERP?

- Yes

- How do you keep the VOs updated based on the Oracle releases?

- Oracle can add new columns to the VOs, so if needed, just open and save the table in Incorta to update its definition to include the new columns.

- How do we size the Incorta server?

- Work with your Incorta representative to determine the correct sizing for your use cases.

- We have a DB script for an Oracle database to assist in this process. Many Fusion customers do not have a replicated Oracle Fusion DB as it is very expensive. If you only has access to BICC, then they need to ask Oracle to run the script in the Fusion DB and provide them with the output so that they can provide it to Incorta.

- How do I dynamically load only the last 90 days of data for a table?

- For the use case of dynamic filter, the user can just use the value '90d' in the date filter field to “Load Only Files Created Since“ to load only the files created in the last 90 days before the loading starts.

- How do I query a VO from OTBI?

- Log into OTBI (sample https://<>/analytics) and go to Administration->Issue SQL and then run your query. Examples: select_physical * from "FscmTopModelAM.FinGlCalAccAM.FiscalDayPVO"

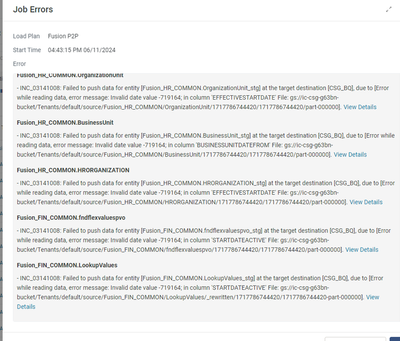

- What do I do if I get a date error while sending data to a BigQuery Data Destination?

- For this error

- Make the CMC tenant config changes, modify the earliest year to 2 and save.

Handling Source Deletes

When loading data incrementally, Incorta inserts new records and updates existing records that have already been loaded, but by default it does not delete records. Source systems like Oracle Cloud ERP, however, may allow users to delete data. This means that if records are deleted in the source, those records may still exist in Incorta. Fortunately, there are options for how to manage source deleted records in Incorta and subsequently BigQuery.

Steps

- Refer to article

- Create a new Job in BICC for the particular VO

- Create a new Connection and set the file type to PECSV and add the Job name and ID pair.

- Create a new Incorta schema for these PK tables and add the PK table using the Schema wizard with this data connection. Keep in mind that the PK table will show all the columns but when you do the load Incorta will only load the PK columns.

- Always do a full load of the PK table. Make sure that the Incremental toggle is turned off for this table and ‘Load Last Batch’ is selected

Refer to the below steps on additional setup to push the corresponding deleted rows to BigQuery

Starting with version 2024.7.3, Incorta has a feature that allows you to purge records from Incorta that have been deleted in the source system. The purge feature is implemented by creating an exclusion set to compare against the table in Incorta that has deletes to track and then running a purge job. The purge job will physically delete records from parquet and memory and then push the corresponding delete statements to BigQuery.

Delete Handling Setup

For each table that needs delete handling, the first step is to create a corresponding skinny table that contains only the primary key column(s) for the table and that is always loaded in full. It is recommended that these tables be placed into a separate schema whose only purpose is to hold these types of tables.

The second step is to create a Materialized View (MV) that will compare the PK table against the base table to create the exclusion set which represents the records that have been deleted in the source table. The MV can be placed in the schema with the PK table. Here is an example of the MV code you would write to create the table that holds your exclusion set.

SELECT I.INVOICE_ID

FROM FUSION_AP.AP_INVOICES_ALL I

ANTI JOIN PK_SCHEMA.AP_INVOICES_ALL_PK P

ON I.INVOICE_ID = P.INVOICE_IDBest Practice

- Say we have a base schema S1 with 5 tables which need delete handling

- Create a new schema S2 with PK tables (PK table always loaded fully) for each of the S1 tables which need delete handling

- In S2 add the MV per S1 table, this MV has the exclusion dataset

- Note: S2 can be fully non optimized as we do need to load any data to memory for those tables, that will save post load time

- We will create 2 LPs, L1 runs before L2

- L1 -> Will have two groups

- Group 1 -> S2 with full load type

- Group 2 -> S1 with purge load type

- L2 -> S1 with incremental load type

Configure Delete Handling

Next, configure your table to use the exclusion set table that you have created by opening the Advanced settings tab.

Toggle on the Synchronizing delete operations option and select the exclusion set identifier schema and table.

Define the column mappings between the target table and the exclusion set and save.

Purge Deleted Records

- The next step to delete records from Incorta is to run a purge job. This can be run table by table as needed or can be scheduled from a separate Load Plan with Data purge option. The purge job will remove records from Incorta .

- Once the above Load plan runs please run a regular incremental Load plan to sync up the corresponding table in the Target CDC layer.

Pre-Seeded Delete Handling Configuration

Most Oracle Cloud applications customers do not delete records from their tables, so delete handling is not enabled automatically. Incorta delivers pre-seeded schemas for delete handling with sample PK tables and Exclusion Set MVs that can be enabled and used as models for setting up delete handling with additional tables if needed.

To enable the delivered configuration for the tables used, follow the instructions in the Configure Delete Handling section above. To add delete handling for new tables, follow the instructions above starting with the Delete Handling Setup section and using the provided samples as models.

Appendix

- Incorta doc on oracle cloud to Bigquery data delivery

- Connecting and Pushing Data to BigQuery

- Configuring Snowflake as a data destination

- Refer to the Connecting and Pushing Data to BigQuery article on Docs for instructions on how to connect Incorta to your BigQuery instance.

- Create Incorta Developer Edition On GCP With GCS Bucket As Tenant Directory

- Community article on how to setup Oracle Data lineage for VOs