Create an ODBC Connection to Incorta

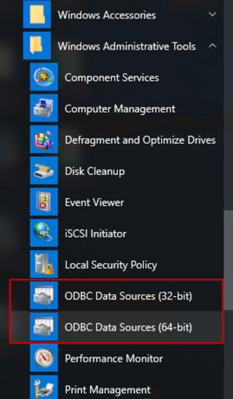

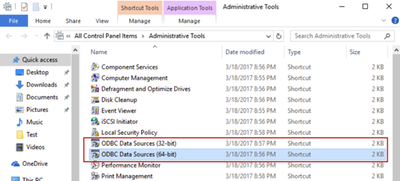

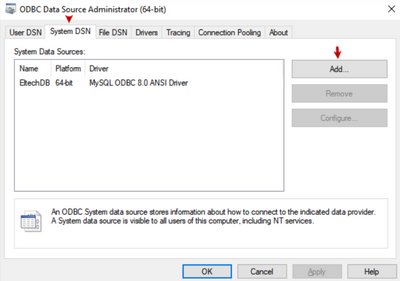

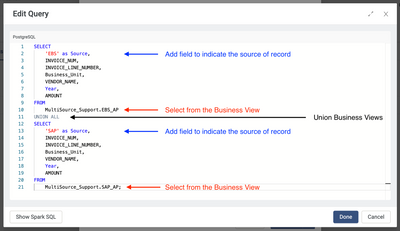

Applies to: Any Incorta version, On-Prem/Cloud

Question: How do I configure an ODBC connection? I am unable to connect to Incorta using ODBC.

Overview: Any program that uses Open Database Connectivity (ODBC) to connect to a remote database needs a way to iden...
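A client usually identifies an ODBC data source either by a configured DSN or by a DSN-less connection string made of semicolon-separated `keyword=value` pairs. The following is a minimal sketch of building such a string, assuming brace-quoting for values that contain separators or spaces (with any `}` doubled). The keywords and values in the usage line (`SERVER`, `UID`, `PWD`, the driver name, the host) are hypothetical placeholders, not documented Incorta settings. Check your ODBC driver's documentation for the keywords it actually accepts.

```python
def odbc_connection_string(attrs: dict[str, str]) -> str:
    """Build an ODBC connection string from keyword/value pairs.

    Assumption: values containing ';', '{', '}' or spaces are wrapped in
    braces, and any '}' inside them is doubled, a common ODBC convention.
    """
    parts = []
    for key, value in attrs.items():
        if any(c in value for c in ";{} "):
            # Quote the value so the driver does not split it on ';'.
            value = "{" + value.replace("}", "}}") + "}"
        parts.append(f"{key}={value}")
    # Terminate with ';' as most examples do; empty input yields "".
    return ";".join(parts) + ";" if parts else ""


# Hypothetical values for illustration only.
print(odbc_connection_string({
    "DRIVER": "Example ODBC Driver",
    "SERVER": "incorta.example.com",
    "UID": "analyst",
    "PWD": "p;ss}word",
}))
# DRIVER={Example ODBC Driver};SERVER=incorta.example.com;UID=analyst;PWD={p;ss}}word};
```

If a DSN has already been configured in the ODBC Data Source Administrator (or `odbc.ini`), the string can be as short as `DSN=<name>;`, with credentials added as needed.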